Considerations for Database Privileged Access

Individuals with privileged access have the technical means to bypass the user interface to access and modify data, often without traceability.

Computerized systems used in the GxP world (e.g., good manufacturing practice or good laboratory practice) call for strict controls to maintain data reliability and integrity, which in turn protect product quality and patient safety. While these controls come in various forms, such as technical controls and checks, procedural controls, and audit trail reviews, organizations often overlook the back door: individuals with privileged access to the database.

“Privileged access” is enhanced permission granted to perform administrative tasks that require additional system or application visibility, such as issue resolution and system modifications or development. The term also applies to legitimate transactional data corrections under appropriate approvals and change documentation.

Individuals with privileged access have the technical means to bypass the user interface to access and modify data, oftentimes without traceability. It is advisable, therefore, to place additional controls and safeguards around privileged access. Modern database engines offer granular access controls and database-level audit trail functionality, both of which can extend data integrity controls to the database layer.

It is imperative that those with privileged access are trained to realize that controlled access is related directly to patient safety; they should also understand the risks they assume if they participate in illicit activities or knowingly allow them to occur.

This article discusses considerations for privileged access—data categorization and levels of control that should be implemented to protect the integrity of the data that resides in the database.

DATA CLASSIFICATION

To protect your data, you must protect your database. Whether traditional, back-end, or cloud-based, databases are central locations that store data and metadata; this makes them prime targets for attacks. With the “big data” explosion and proliferation of data from legacy or emerging technologies (such as the Internet of Things), some of the biggest challenges organizations face today include the need for:

- An inventory of data and information asset owners

- An understanding of the data and its value

- A framework that mandates different levels of protection and control implementation based on a structured and well-defined approach

The good news is that a well-defined data-classification process and framework will provide solutions for these challenges.

Data classification is the process of assigning an economic value or rating to data. Examples include identifying and rating sensitive databases, tables, or columns, and identifying restricted or confidential information in database storage. Data classification determines how organizations understand and manage business processes at the most elementary level. It is a fundamental element in data protection and in both enterprise data management and data governance.

As the first step toward data protection, data classification includes:

- Classifying a database data segment to allow tiered protection schemes and handling

- Encouraging proper labeling and handling of sensitive data

- Preventing unauthorized access to sensitive information

- Compliance with laws and regulations

The goal is simple: to create a classification framework that enables an organization to identify, label, and protect sensitive data in different databases. The framework consists of several components, including:

- Classification policies: Define scope, responsibilities, and other governance requirements

- Classification scheme: Determines tiers of data and associated levels of protection (Today, three- or four-tier schemes are common; five-tier schemes are usually found in industries that produce intellectual property.)

- Labeling guidelines: Instructions for labeling that enable automated protection tools

- Handling guidelines for different data classification levels

- Classification mapping to link data types or data sets to classification levels

There is no one-size-fits-all approach to building a data classification framework, however. It should be based on the type of organization and its overall data-protection strategy. At a minimum, a high-level policy that specifies the protocol for protecting sensitive data should be in place and should link to the data-classification policy. Classification guidelines (i.e., labeling and handling guidelines) and compensating controls must be linked to each classification level. Data classification processes should be defined for consistent and repeatable execution. More importantly, because data and its value change over time, its sensitivity and need for protection also change. Data or database owners must keep classification guidance up to date.
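As a rough illustration of how a classification scheme, handling guidelines, and a classification mapping fit together, the sketch below uses a hypothetical four-tier scheme. The tier names, the handling attributes, the review intervals, and the table and column names are all assumptions made for the example; none of them is prescribed by a regulation or by this article.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical four-tier scheme; a higher value means more sensitive."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


@dataclass(frozen=True)
class Handling:
    encrypt_at_rest: bool
    audit_reads: bool
    review_interval_days: int


# Handling guidelines: one entry per classification level (illustrative values).
HANDLING = {
    Tier.PUBLIC: Handling(False, False, 365),
    Tier.INTERNAL: Handling(False, False, 365),
    Tier.CONFIDENTIAL: Handling(True, False, 180),
    Tier.RESTRICTED: Handling(True, True, 90),
}

# Classification mapping: links data sets (here, table.column names) to a tier.
CLASSIFICATION_MAP = {
    "batch_record.result": Tier.RESTRICTED,
    "patient.name": Tier.RESTRICTED,
    "employee.email": Tier.CONFIDENTIAL,
    "site.address": Tier.PUBLIC,
}


def handling_for(data_set: str) -> Handling:
    """Return the handling rules for a data set.

    Unmapped data defaults to the most restrictive tier, so a gap in the
    mapping fails safe rather than open (a design assumption of this sketch).
    """
    tier = CLASSIFICATION_MAP.get(data_set, Tier.RESTRICTED)
    return HANDLING[tier]
```

Keeping the mapping separate from the handling rules means that a data owner can reclassify a data set as its value changes without touching the rules themselves.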

CONTROLS

Computerized systems need risk-based network- and account-level security controls to limit access to the database. These controls include:

- Tiered system design

- Database connection restrictions for service account(s)

- Separate administrative accounts (which differ from user accounts for day-to-day work)

- Database connection restrictions for doing-business-as accounts

- Database connection (in transit) encryption

- Database encryption at rest

System access controls should be strictly managed, documented, and authorized, including enforcement of unique usernames and passwords that expire in accordance with the US Code of Federal Regulations (CFR) Title 21, Part 11 [1] and other regulations. Where applications are hosted, individual user logins may be imposed.

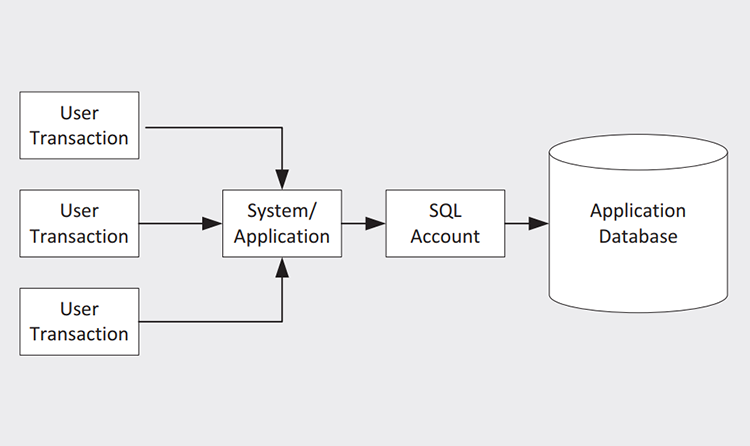

However, the application itself may use a generic account to access and store the data within the database or server (Figure 1). This is acceptable if the user who undertook the activity is traceable and each transaction is attributable.
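One way to keep transactions attributable when the application connects through a generic account is to have the application write the acting user into an audit table in the same database transaction as the change. The sketch below is a minimal illustration using Python's built-in `sqlite3` module and an in-memory database; the table names, column names, and the `update_result` helper are hypothetical and stand in for whatever the real application and database engine provide.

```python
import sqlite3
from datetime import datetime, timezone


def open_db() -> sqlite3.Connection:
    """Create an in-memory database standing in for the application back end."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE sample_result (id INTEGER PRIMARY KEY, value REAL NOT NULL);
        CREATE TABLE audit_trail (
            id INTEGER PRIMARY KEY,
            record_id INTEGER NOT NULL,
            app_user TEXT NOT NULL,   -- the individual, not the generic account
            action TEXT NOT NULL,
            old_value REAL,
            new_value REAL,
            changed_at TEXT NOT NULL
        );
        """
    )
    return conn


def update_result(conn: sqlite3.Connection, app_user: str,
                  record_id: int, new_value: float) -> None:
    """Change a value and write the audit entry in the same transaction."""
    if not app_user:
        raise ValueError("every transaction must be attributable to a user")
    with conn:  # commits both statements together, or rolls both back on error
        row = conn.execute(
            "SELECT value FROM sample_result WHERE id = ?", (record_id,)
        ).fetchone()
        if row is None:
            raise LookupError(f"no record with id {record_id}")
        conn.execute(
            "UPDATE sample_result SET value = ? WHERE id = ?",
            (new_value, record_id),
        )
        conn.execute(
            "INSERT INTO audit_trail "
            "(record_id, app_user, action, old_value, new_value, changed_at) "
            "VALUES (?, ?, 'UPDATE', ?, ?, ?)",
            (record_id, app_user, row[0], new_value,
             datetime.now(timezone.utc).isoformat()),
        )
```

Note that this kind of application-level audit trail does nothing to record changes made directly in the database by someone with privileged access, which is why the database-level controls discussed in this article are still needed.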

Remote server access should be controlled in the same manner as in the system or application account, but additional controls should be established to record who needs access, the rationale for it, and the duration necessary.
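The three items named above (who needs access, why, and for how long) can be captured in a simple time-boxed grant record. The following is a sketch only; the `RemoteAccessGrant` class and its field names are hypothetical, and a real implementation would normally live in an access-management or ticketing system rather than in application code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class RemoteAccessGrant:
    """Hypothetical record of one approved remote-access request."""
    user: str            # who needs access
    server: str
    rationale: str       # why the access is needed
    starts_at: datetime
    duration: timedelta  # how long the access is needed

    def __post_init__(self) -> None:
        if not self.rationale.strip():
            raise ValueError("a rationale is required for remote access")
        if self.duration <= timedelta(0):
            raise ValueError("duration must be positive")

    @property
    def expires_at(self) -> datetime:
        return self.starts_at + self.duration

    def is_active(self, now: datetime) -> bool:
        """Access is valid only inside the approved window."""
        return self.starts_at <= now < self.expires_at
```

A grant for a four-hour investigation, for example, would stop being active at the end of the fourth hour without anyone having to remember to revoke it.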

- [1] Electronic Code of Federal Regulations. Title 21, Chapter 1, Subchapter A, Part 11. “Electronic Records; Electronic Signatures.” https://www.ecfr.gov/cgi-bin/text-idx?SID=000ba713f162c3eb2b065c62bd28c499&mc=true&node=pt21.1.11&rgn=div5

SEGREGATION OF DUTIES

In the world of pharmaceutical products, we would never have the same person manufacture a product and release it to market. Regulations require checks and balances, known as segregation of duties (SOD), to separate tasks and assign responsibilities to different people. In manufacturing, there are roles for people who create data (i.e., the manufacturing operators) and roles for those who approve or release data (i.e., the quality specialists). The SOD is clear: Different functional groups are responsible for the creation and the approval of data or records.

The same holds true when a computerized system automates a business process. We apply SOD at the operating system, application, and/or database levels. As previously mentioned, those with privileged access are able to change the way the system works, turn off the audit trail, or even change data that was created in the system—often without traceability. For these reasons, controls require another distinct person to perform these activities.

This person should not have any responsibility, accountability, or direct interest in the data and/or records that are created and maintained in the computer system and should be detached from the business process.

Because SOD can be costly and/or inefficient, these controls should be applied according to a documented, risk-based approach.
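In software, the create-versus-approve separation described in this section can be enforced with a check at approval time. The sketch below is illustrative only: the `Person` and `Record` types and the group names are hypothetical, and the rule that the approver must come from a different functional group is one reading of the SOD principle above, not a regulatory definition.

```python
from dataclasses import dataclass
from typing import Optional


class SegregationOfDutiesError(Exception):
    """Raised when one person or group would both create and approve a record."""


@dataclass(frozen=True)
class Person:
    username: str
    functional_group: str  # e.g. "manufacturing" or "quality"


@dataclass
class Record:
    record_id: str
    created_by: Person
    approved_by: Optional[Person] = None


def approve(record: Record, approver: Person) -> Record:
    """Approve a record only if the approver is independent of its creator."""
    if approver.username == record.created_by.username:
        raise SegregationOfDutiesError("creator cannot approve their own record")
    if approver.functional_group == record.created_by.functional_group:
        raise SegregationOfDutiesError(
            "approver must belong to a different functional group"
        )
    record.approved_by = approver
    return record
```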

ACCESS CONTROL AND REVIEW

Access to computerized systems should be granted only to individuals who have been trained to perform the activity and have a legitimate business reason to access the system; the request for access (or additional access) should also be documented in accordance with onboarding standard operating procedures (SOPs). Access for employees who leave the company should be revoked in accordance with SOPs, with no residual access available (i.e., the application access has been removed but the user-account-related metadata remains to ensure the accuracy of the data and audit trail).

Privileged database account access should be minimal, in keeping with what Jerome Saltzer has described as “the principle of least privilege.” [2] If the database administrator account will be used by multiple individuals, a password storage database (password vault) should be established. This lets individuals use their own passwords (presuming they have sufficient permission) to log in to the system and prevents the administrator password from being revealed. Each access is recorded in the audit log.

In smaller organizations, procedural risk-based controls should be in place so that access requests are recorded and approved before being granted. The password should be changed after each use and stored in a secure location, whether physically sealed in an envelope or kept electronically in a password-management tool.
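The approve, use, and change-after-use cycle described above can be sketched as a small in-memory model. This is a teaching aid under stated assumptions, not a substitute for a real password-management product: the `AdminPasswordVault` class and its method names are hypothetical, nothing here is encrypted or persisted, and the only real library call is `secrets.token_urlsafe` from the Python standard library.

```python
import secrets
from datetime import datetime, timezone


class AdminPasswordVault:
    """Toy model of a shared administrator password under procedural control."""

    def __init__(self) -> None:
        self._password = secrets.token_urlsafe(24)
        self._approved = set()
        self.log = []  # entries are (timestamp, action, user, detail)

    def _record(self, action: str, user: str, detail: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.log.append((stamp, action, user, detail))

    def approve_request(self, user: str, approver: str) -> None:
        """Record that an access request was approved before access is granted."""
        self._approved.add(user)
        self._record("APPROVED", user, f"approved by {approver}")

    def check_out(self, user: str) -> str:
        """Release the password only against a recorded approval."""
        if user not in self._approved:
            self._record("DENIED", user, "no approved request")
            raise PermissionError(f"{user} has no approved access request")
        self._approved.discard(user)  # one approval covers one use
        self._record("CHECK_OUT", user, "password released")
        return self._password

    def check_in(self, user: str) -> None:
        """Change the password after use so the released value stops working."""
        self._password = secrets.token_urlsafe(24)
        self._record("CHECK_IN", user, "password changed after use")
```

Treating each approval as single-use is a design choice of this sketch; an organization could equally tie approvals to a time window.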

Review of the controls placed on privileged access is almost as important as the controls themselves. Privileged access may be temporary or permanent, depending on the nature and complexity of the access needed. It is essential that privileged access be revoked as soon as an individual no longer requires it: The system could otherwise be left vulnerable to unauthorized modification. Periodic review of user account and access privileges is essential so that only proper access is permitted. Supplementing this with automated monitoring is even more effective.
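A periodic review of privileged accounts lends itself to simple automation. The sketch below assumes a hypothetical export of privileged accounts with an expiry date, a last-review date, and an employment flag; the field names and the 90-day review interval are illustrative assumptions, and the appropriate interval should come from the organization's own risk assessment.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class PrivilegedAccount:
    user: str
    role: str
    expires_on: Optional[date]  # None means the grant is permanent
    last_reviewed: date
    is_employed: bool


def review_findings(accounts: List[PrivilegedAccount], today: date,
                    max_review_age_days: int = 90) -> List[Tuple[str, str]]:
    """Return (user, finding) pairs for accounts that need attention."""
    findings = []
    for acct in accounts:
        if not acct.is_employed:
            findings.append((acct.user, "revoke: user has left the company"))
        elif acct.expires_on is not None and acct.expires_on < today:
            findings.append((acct.user, "revoke: temporary access has expired"))
        elif (today - acct.last_reviewed).days > max_review_age_days:
            findings.append((acct.user, "review overdue"))
    return findings
```

Running a check like this on a schedule is one form of the automated monitoring mentioned above; the output still needs a person to act on it.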

DATABASE AUDITING

For critical databases containing sensitive data, database auditing confirms that the company’s security policy supports data integrity. The most common database-level security issues are external attacks, unsanctioned activities by authorized users, and mistakes. Developing a risk-based audit strategy will confirm that appropriate security measures are in place, identify necessary improvements, and facilitate forensic analysis when an incident does occur. The strategy should audit the following:

- Privileged user access, to determine who has accessed the database, when access was obtained, how it was obtained (i.e., where it originated), and what data was accessed.

- Failed access attempts, which may indicate efforts to gain unauthorized access.

- Activities performed when access is gained; this audit may be performed at the statement, privilege, or object level, or it may be a fine-grained audit, particularly when a data integrity violation is suspected or identified.

- Suspicious activity, to identify any unusual or abnormal access to sensitive data.

- Account creation, to ensure that all accounts with database-level access were created through correct processes and have correct permissions.

- Changes and deviations from the database policy and configuration.

To be effective, the auditing process must be methodical and repeatable; it should be reviewed periodically to determine that it remains sufficient to protect data integrity.
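Two of the audit items listed in this section, failed access attempts and suspicious privileged activity, can be illustrated with a short scan over exported audit events. The sketch assumes a hypothetical event format (`AuditEvent`) and hypothetical thresholds (three failures, working hours of 07:00 to 19:00); real database engines expose audit data in their own formats, and the thresholds should follow the organization's risk-based audit strategy.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, time
from typing import List


@dataclass(frozen=True)
class AuditEvent:
    timestamp: datetime
    account: str
    source_host: str   # where the access originated
    action: str        # e.g. "LOGIN_FAILED", "LOGIN_OK", "UPDATE"
    privileged: bool


def flag_events(events: List[AuditEvent], max_failures: int = 3,
                work_start: time = time(7, 0),
                work_end: time = time(19, 0)) -> List[str]:
    """Return human-readable flags for events that merit review."""
    flags = []

    # Repeated failed attempts may indicate efforts to gain unauthorized access.
    failures = Counter(e.account for e in events if e.action == "LOGIN_FAILED")
    for account, count in sorted(failures.items()):
        if count >= max_failures:
            flags.append(f"{account}: {count} failed access attempts")

    # Successful privileged activity outside working hours is treated as unusual.
    for e in events:
        out_of_hours = not (work_start <= e.timestamp.time() < work_end)
        if e.privileged and e.action != "LOGIN_FAILED" and out_of_hours:
            flags.append(
                f"{e.account}: out-of-hours privileged {e.action} "
                f"from {e.source_host} at {e.timestamp.isoformat()}"
            )
    return flags
```

A flag is a prompt for review, not proof of wrongdoing; an approved out-of-hours maintenance window, for instance, would explain the second kind of flag.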

CONCLUSION

Computerized systems in a GxP environment require strict controls, management, and documented processes to protect and maintain data integrity. Areas of concern include classifying systems and data; controlling access at user, administrator, and supplier levels; segregating duties between individuals and functions; and historical and ongoing auditing.

Systems and databases contain a large array of data, including confidential patient, employee, and customer information, as well as manufacturing traceability; therefore, organizations should classify their systems and the data contained within them.

Organizations should have defined, accountable data owners who understand the value of data and the level of protection required, and have a clear framework to apply controls. While each individual organization will have a different framework, data owners must understand that both the data value and the framework design change over time.

While it is essential that organizations manage system-level access with appropriate controls and processes, privileged access should be treated with the same—if not greater—rigor and thoroughness. Privileged access should be kept to a minimum and reviewed regularly to prevent data deletion or unauthorized, malicious modification. SOD between interested parties in relation to data owners—ensuring that there is no conflict of interest, for example—is also important.

Privileged access is a key component of overall database security, which should include controls on both physical and remote access, access audit reviewing, and controls on user application access, such as those described in 21 CFR Part 11.

Organizations should also implement an appropriate audit strategy to monitor, among other things, the use of privileged access accounts and internal and external failed access attempts, as well as activities undertaken upon gaining entry and their traceability to suspicious activities such as out-of-hours access requests. This audit strategy should be repeatable and reviewed periodically so that it remains viable and effective.

- [2] Saltzer, J. H., and M. D. Schroeder. “The Protection of Information in Computer Systems.” Proceedings of the IEEE 63, no. 9 (Sept. 1975): 1278–1308. http://web.mit.edu/Saltzer/www/publications/protection/